May 6, 2020

A successful team: "Seeing" robots are conquering the automation sector

Easy control of collaborating robots with vision sensors

Collaborating robots, so-called cobots, support the automation of manual tasks. The easy-to-program cobots from Universal Robots lower the barrier to entry. Combined with VeriSens vision sensors, they form an unbeatable team that makes vision-guided robotics particularly easy to apply.

I see what I do: what is natural to humans is a challenge for robots. Image processing plays a vital part in establishing robotics applications in an increasing number of automation areas. Vision sensors such as the Baumer VeriSens models XF900 and XC900 can play a decisive role in this regard. They pack a complete image processing system into a compact industrial-grade housing and are also easy to parameterize. This makes image processing considerably easier for users. It is therefore logical to combine a VeriSens vision sensor and a Universal Robots cobot into a "seeing" robot that can be set up more easily and quickly, and programmed more precisely, than previous solutions.

What are the benefits of image processing for robots?

Robots are guided by taught-in, fixed waypoints to which they travel in sequence. Image processing considerably enhances this function. Simply adding a vision sensor already allows the robot to reliably identify objects. Simultaneously or alternatively, quality control can be implemented, with the robot supporting the inspection by moving the sensor to fixed positions. Using the resulting data in the robot program, objects can then also be sorted automatically.

However, the ultimate application is guiding the robot itself: image processing determines the position of objects and passes this information to the robot, which can then grip freely beyond previously fixed waypoints. Objects may lie in any position and orientation on a surface. Image processing determines and transfers the position, rotation, and other optional data such as the location.

Interface between VeriSens and Universal Robots

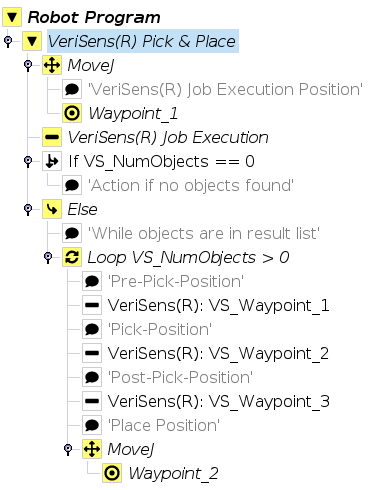

Similar to the "app concept" of smartphones, Universal Robots uses so-called URCaps for its certified accessories: software plug-ins that allow extensions such as a VeriSens vision sensor to be used in the Universal Robots PolyScope programming environment.

The image processing task itself is parameterized completely and as usual via the VeriSens Application Suite – independently of the cobot, in the environment best suited for this purpose.

The functions of the VeriSens URCap are generic and thus address all conceivable applications, including the integrated or stationary arrangement of the vision sensor.

In addition to the installation routine configured in the URCap, programming the Universal Robots cobot requires only two additional "nodes" (commands) that integrate image processing into the robot program. For object identification or quality control, for example, a single node is enough to trigger an image processing job on the VeriSens from the robot program and to make the results available as a variable in the program sequence for decision making. This already allows the cobot to sort objects.
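
The decision step described above can be pictured with a minimal Python sketch. It is not URScript and not the VeriSens URCap interface: `InspectionResult`, its fields, and the waypoint names are hypothetical stand-ins for whatever result variable the node exposes in the robot program.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InspectionResult:
    """Hypothetical stand-in for the result variable of one vision job."""
    passed: bool                 # overall pass/fail of the image processing job
    label: Optional[str] = None  # optional identification result, e.g. a part type


# Hypothetical names of taught-in drop-off waypoints in the robot program.
BIN_WAYPOINTS = {"A": "bin_a", "B": "bin_b"}
REJECT_WAYPOINT = "reject_bin"


def choose_drop_waypoint(result: InspectionResult) -> str:
    """Pick the drop-off waypoint based on the vision result."""
    if not result.passed:
        return REJECT_WAYPOINT
    # Parts with an unknown or missing label are rejected as well.
    return BIN_WAYPOINTS.get(result.label, REJECT_WAYPOINT)
```

In this sketch the robot still travels only to fixed, taught-in waypoints; the vision result merely selects which one.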

For image-based gripping, the second node is added, which complements the fixed waypoints with dynamic image-based ones. Optionally, a purpose-built assistant helps with the easy implementation of pick and place applications.
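
How a dynamic, image-based waypoint could be derived from a detected object pose is sketched below in Python. This is an illustration under assumptions, not the logic of the URCap node: the function `grip_pose` and its parameters are hypothetical, the object pose is assumed to be already expressed in robot coordinates, and the grip point is assumed to have been taught once as an offset in the object's own frame.

```python
import math


def grip_pose(obj_x, obj_y, obj_angle_deg, offset_x, offset_y, grip_angle_deg=0.0):
    """Return (x, y, angle_deg) of the grip point in robot coordinates.

    obj_x, obj_y, obj_angle_deg: detected object pose in the robot frame.
    offset_x, offset_y: taught grip point, expressed in the object frame.
    grip_angle_deg: taught gripper rotation relative to the object.
    """
    t = math.radians(obj_angle_deg)
    # Rotate the taught offset by the object rotation, then shift it
    # to the detected object position.
    gx = obj_x + offset_x * math.cos(t) - offset_y * math.sin(t)
    gy = obj_y + offset_x * math.sin(t) + offset_y * math.cos(t)
    ga = (obj_angle_deg + grip_angle_deg) % 360.0
    return gx, gy, ga


# An object found at (100, 50) and rotated by 90 degrees; the grip point
# was taught 10 units along the object's own x axis.
print(grip_pose(100.0, 50.0, 90.0, 10.0, 0.0))
```

Because the offset rotates with the object, the same taught grip point works for any orientation of the part on the surface.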

The best thing about automatic calibration

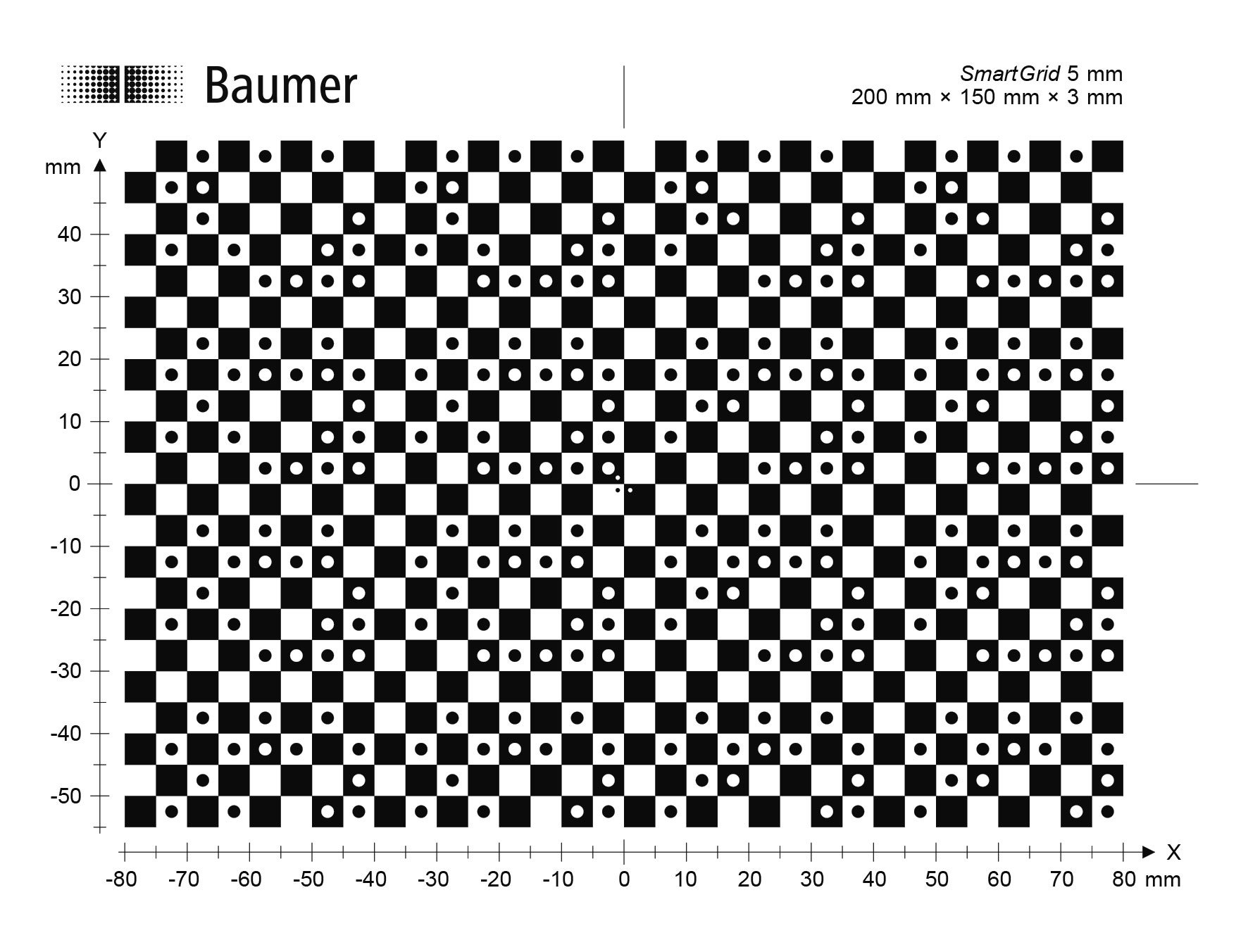

Robots and vision sensors each operate in their own coordinate system, which only becomes relevant when object positions are transferred from VeriSens to the cobot. The coordinates of the vision sensor must be converted into the robot coordinate system. This coordinate transformation was previously handled by "hand-eye calibration": the cobot was manually positioned several times with a probe tip on a special calibration target, following a manufacturer-specified procedure with many individual steps. This is tedious, and because a human guides the probe tip, it is also imprecise and error-prone.
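
To make the idea of the coordinate transformation concrete, the following Python sketch fits a planar mapping from sensor coordinates to robot coordinates using a few point pairs. It is a generic least-squares fit of rotation, uniform scale, and translation, not Baumer's calibration method; the function names are hypothetical, and the sketch assumes that both coordinate systems have the same handedness (no mirrored axis).

```python
import cmath
import math


def fit_similarity(sensor_pts, robot_pts):
    """Least-squares fit of q = a * p + b, with points treated as complex numbers.

    The complex factor a encodes rotation and uniform scale, b the translation.
    """
    p = [complex(x, y) for x, y in sensor_pts]
    q = [complex(x, y) for x, y in robot_pts]
    n = len(p)
    p_mean = sum(p) / n
    q_mean = sum(q) / n
    num = sum((qi - q_mean) * (pi - p_mean).conjugate() for pi, qi in zip(p, q))
    den = sum(abs(pi - p_mean) ** 2 for pi in p)
    if den == 0:
        raise ValueError("sensor points must not all coincide")
    a = num / den
    b = q_mean - a * p_mean
    return a, b


def sensor_to_robot(a, b, x, y, angle_deg=0.0):
    """Map a sensor position and rotation into the robot coordinate system."""
    z = a * complex(x, y) + b
    # The rotation between the two systems is the phase of a.
    return z.real, z.imag, angle_deg + math.degrees(cmath.phase(a))


# Three reference points seen by the sensor and the same points as
# known to the robot (made-up numbers for illustration).
sensor = [(0, 0), (10, 0), (0, 10)]
robot = [(100, 200), (100, 205), (95, 200)]
a, b = fit_similarity(sensor, robot)
print(sensor_to_robot(a, b, 4, 2, angle_deg=10.0))
```

In a manual hand-eye calibration, the robot-side points would come from touching the target with the probe tip, which is exactly where the human error enters.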

With the SmartGrid (patent pending), Baumer is taking an innovative path with automatic calibration for its VeriSens vision sensors, a quantum leap for coordinate alignment. The highlight is the intelligent bit pattern hidden in the standard checkerboard format. It provides valuable additional information that the VeriSens, as an intelligent image processing device, can read. This information includes the position of the vision sensor over the pattern. As the cobot always knows its own coordinates, a limited number of linear and rotational movements are enough to automatically align the coordinate systems. Not only is this process very precise and free of manual errors, it is also very easy to carry out on the touch screen of the cobot.

2D vision sensor for 3D robots

In terms of coordinates, this solves the problem of finding objects. However, much more can be accomplished with the SmartGrid: VeriSens also uses the grid to learn an ideal image. The vision sensors can then rectify captured images in real time, for example to correct lens distortion. As the bit pattern also encodes the size of the SmartGrid in use, the VeriSens now has all the information required for scaling. Conversion into global coordinates is thus set up automatically.

The SmartGrid additionally supports semi-automatic Z calibration, with which the VeriSens can learn its position in space and apply the data from the image rectification in space as well. This resolves the final challenge for vision-guided robotics: the 2D vision sensor has to provide data to a 3D robot. It would not be very user friendly if only the coordinates of a single plane, the image plane, could be used. A robot in particular also requires coordinates at other heights along the Z axis, e.g., for gripper access or for the detection of important markings. Z calibration makes the automatic alignment of the coordinates to other "heights" possible.
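
Why a single calibrated plane is not enough can be illustrated with a simple pinhole-camera sketch in Python. It is a geometric illustration only, not the VeriSens Z calibration: the function `correct_for_height` is hypothetical, and it assumes an ideal, distortion-free camera looking straight down, with its height above the calibrated plane and the point directly beneath it known.

```python
def correct_for_height(x_ref, y_ref, cam_x, cam_y, cam_height, obj_height):
    """Correct a position measured on the calibrated plane for object height.

    x_ref, y_ref: position as computed for the calibrated plane (height 0).
    cam_x, cam_y: point on that plane directly below the camera.
    cam_height: camera height above the calibrated plane.
    obj_height: height of the observed feature above the calibrated plane.
    """
    if not 0 <= obj_height < cam_height:
        raise ValueError("feature must lie between the plane and the camera")
    # A raised feature appears pushed away from the point below the camera;
    # similar triangles give the factor that pulls it back.
    k = (cam_height - obj_height) / cam_height
    return cam_x + (x_ref - cam_x) * k, cam_y + (y_ref - cam_y) * k


# Camera 400 mm above the plane, feature on top of a 100 mm high part.
print(correct_for_height(80.0, -40.0, 0.0, 0.0, 400.0, 100.0))
```

Without such a correction, the error grows with both the height of the feature and its distance from the point below the camera, which is why gripping at heights other than the calibrated plane needs its own alignment.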

This innovative approach of automatic coordinate alignment, automated real time image rectification, conversion to global coordinates, and Z-calibration now makes vision-guided robotics applications easily accessible to many users, which is in line with the topmost development aim of VeriSens vision sensors – the easiest handling on the market.
